What designers need to know about... RAG

What is RAG?

Retrieval-augmented generation (RAG) is a technique for improving the accuracy and reliability of generative AI models like GPT-4 and Gemini.

Without RAG, a model (think old-school GPT-3) is limited to its original training data and whatever information the user includes in their prompt. A RAG-supported system instead retrieves relevant external data specifically for each prompt and hands it to the model along with the request, so the output can draw on information the model was never trained on.

Imagine it like this:

Generative AI is a mixmaster DJ busy on tour, and RAG is a record-obsessed assistant who trawls the internet for the best fresh tracks for whatever vibe you want.

The DJ wants fresh tracks for their upcoming set opening for Tyler, The Creator (the user), but has been touring endlessly since September 2021 with no internet access. Tyler has specifically asked for a “spicy set of fresh material,” much to the DJ’s dismay.

They’ve got no idea what’s happened musically since 2021 and their track selection is limited to what’s in their record box!

Lucky for the DJ, RAG can step in and search the internet in real time to grab material from Tyler’s 2024 album Chromakopia, as well as newly released tracks from similar artists. Just what Tyler asked for.

This ability of RAG-supported generative AI models to pull in external data sources “live” like this offers users way more value.
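For the technically curious, here’s roughly what the record-obsessed assistant does, as a toy Python sketch. It assumes a tiny hard-coded “record box” of documents and scores them by simple word overlap; real RAG systems typically use embeddings and a vector database instead. `retrieve`, `build_augmented_prompt`, and the document contents are hypothetical, and the actual call to a model like GPT-4 is left out.

```python
import re

# Hypothetical "record box": a tiny in-memory knowledge base.
# Real systems usually keep many documents in a search index or vector database.
DOCUMENTS = [
    {"source": "release-notes-2024.txt",
     "text": "Tyler, The Creator released the album Chromakopia in 2024."},
    {"source": "tour-diary-2021.txt",
     "text": "The DJ has been touring since September 2021."},
    {"source": "cooking-blog.txt",
     "text": "How to make a spicy chili for a crowd."},
]


def tokenize(text: str) -> set:
    """Lowercase the text and return its set of words (letters and digits only)."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list, k: int = 2) -> list:
    """Step 1 (retrieve): return up to k documents sharing the most words with the query."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc["text"])), doc) for doc in documents]
    scored = [pair for pair in scored if pair[0] > 0]  # ignore documents with no overlap
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def build_augmented_prompt(query: str, retrieved: list) -> str:
    """Step 2 (augment): put the retrieved passages, tagged with sources, ahead of the question."""
    context = "\n".join(f"[{doc['source']}] {doc['text']}" for doc in retrieved)
    return (
        "Answer using only the context below and cite sources in brackets.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


question = "What album did Tyler, The Creator release in 2024?"
hits = retrieve(question, DOCUMENTS)
# Step 3 (generate) would send this prompt to the model; here we just print it.
print(build_augmented_prompt(question, hits))
```

The augmented prompt is what the generative model actually sees, which is why the quality of retrieval (and of the user’s question) shapes the quality of the answer.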

So how does RAG benefit users?

RAG-supported generative AI models can boost user satisfaction by up to 20% compared to traditional models. Here’s how:

Accuracy

RAG enables AI models to fulfill user instructions more accurately by retrieving relevant, recent data and displaying it with sources (shoutout to Perplexity for leading the pack here).

This is a huge step up from older models like GPT-3, which often provided vague, superficial outputs delivered overconfidently, with no evidence of their reasoning.

Heightened accuracy and transparency have opened the door to use cases in high-stakes environments like medicine and law, where RAG-powered systems can cite specific, recent journal articles, improving perceived trustworthiness.

Note: RAG-powered systems still hallucinate, but up to 30% less often than traditional models.

In other words, GPT-3 was dropping 6 hits of acid every weekend, whereas GPT-4o is just “microdosing”. 😉

Efficiency

By generating more relevant, accurate, and precise outputs, RAG removes friction for users. When answers align more closely with what users meant, they spend less time and effort (35% less time, according to IBM) and succeed at more of their tasks.

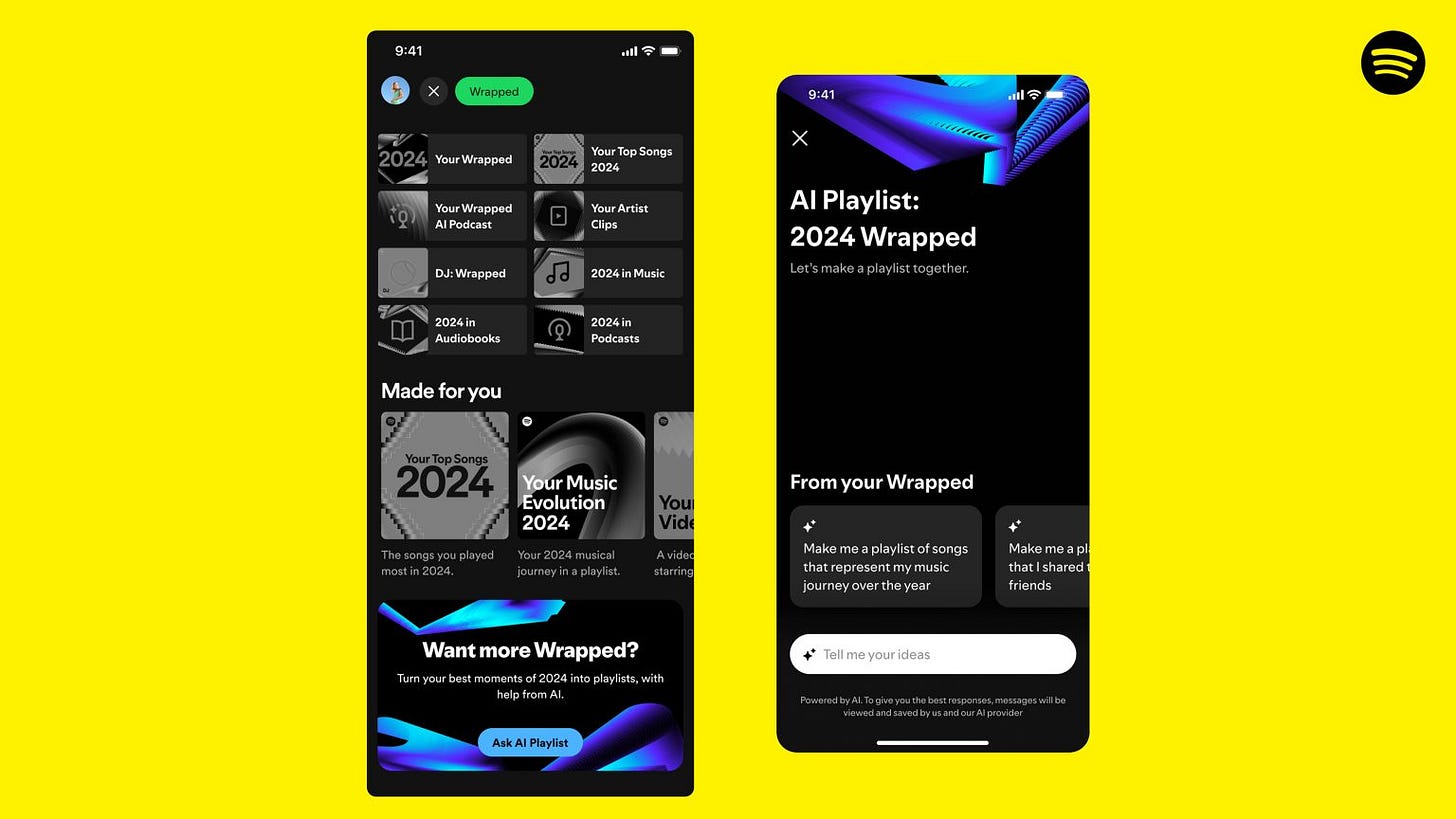

Spotify Wrapped is a solid example. It pulls up-to-date context on each user’s recent listening to serve up fresh, personal playlists.

Older systems without RAG would only have access to static training data, missing out on evolving trends and tastes.

Personalization

That same access to fresh, relevant context helps models adapt in real time to each user’s needs and preferences.

Going back to our Spotify Wrapped example, RAG helps put all that gathered user data to work, delivering on-brand, ultra-personalized playlists (like “Your Music Evolution 2024”) based on each user’s listening over the past year.

It feels personal because it is—the model actively retrieves and tailors its output to you.

So how can designers help improve RAG model experiences for users?

Guiding users to offer better instructions

RAG models perform best when users give clear instructions that communicate their intent. If the prompt fails to point the record-obsessed assistant in the right direction, users are less likely to get what they came for.

Designers can help by creating patterns that reduce user friction and guide input clarity. For example, writing open-text prompts demands significant effort, but pointing users toward attaching relevant images or documents can raise the quality of their input. Loads of AI users still aren’t aware this is an option.

Another pattern: once a user starts typing an open-text prompt, Gemini for Workspace offers suggestions that anticipate their intent.

Explaining the retrieval process

Trust is a big deal when it comes to user adoption. If users don’t trust an AI model or feature, they won’t use it. But how can users trust something when they have no idea how it works?

Designers can bridge this gap by explaining the retrieval process. For instance, RAG-supported systems that display their sources (Perplexity again being the standout) give users a behind-the-scenes look at how the AI arrived at its output. Simple visual cues, like expandable “view sources” buttons or confidence indicators, can make a massive difference in building trust.

Want to dig deeper into this topic? Read this piece from IBM.

Helping users identify and address errors

Since RAG models can still make mistakes, users benefit from features that flag incorrect outputs or verify sources. For example, a legal research tool could include a "validate source" button, enabling users to double-check the credibility of retrieved data. Similarly, fallback messages like "I couldn’t find anything, but here’s what I tried searching for…" can help users feel more in control of the interaction.
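For designers curious about what might sit behind the interface, here’s a rough Python sketch of how the “view sources” and fallback-message patterns could be wired up. `RagResult`, `Source`, and `render` are hypothetical names, the example.com URL is a placeholder, and a real product would render buttons and links rather than plain text.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Source:
    title: str
    url: str


@dataclass
class RagResult:
    """Hypothetical payload a RAG backend might hand to the interface."""
    answer: Optional[str]                                   # None when retrieval came up empty
    sources: List[Source] = field(default_factory=list)     # what a "view sources" control would expand
    queries_tried: List[str] = field(default_factory=list)  # searches the system ran, shown on a miss


def render(result: RagResult) -> str:
    """Return user-facing text: an answer plus its sources, or a transparent fallback message."""
    if result.answer is None or not result.sources:
        # Treat an unsourced answer as a miss and show what was searched instead.
        tried = ", ".join(f'"{q}"' for q in result.queries_tried) or "(no searches ran)"
        return f"I couldn't find anything, but here's what I tried searching for: {tried}"
    lines = [result.answer, f"View sources ({len(result.sources)}):"]
    lines += [f"  {i}. {s.title} ({s.url})" for i, s in enumerate(result.sources, start=1)]
    return "\n".join(lines)


print(render(RagResult(
    answer="Here's a summary of what I found.",
    sources=[Source("Example journal article", "https://example.com/article")],
)))
print(render(RagResult(answer=None, queries_tried=["2024 case law", "recent rulings"])))
```

One design choice worth flagging: the sketch treats an answer with zero sources as a miss and shows the fallback instead, on the theory that an uncited answer is exactly what users can’t verify.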

Wrap up

RAG represents a massive leap in how AI models retrieve and generate information, offering users more accurate, efficient, and personalized experiences.

As designers, we’re uniquely positioned to tailor these experiences to users’ actual needs, from guiding input clarity to building transparency into outputs.

//Pete